Interfaces, metaphors & multi-touch

August 28, 2006 | 13:19

Companies: #apple #microsoft #research #xerox

Finger Massage

Older touchscreens were limited: they could only detect a single point of contact. This locks the device into two dimensions - you can only select items or push them around a 2D landscape. Recently that limitation has been lifted, and a new type of touchscreen tech, called multi-touch, is coming of age.

Multi-touch panels can detect multiple points of contact at once. Initially you might only think of using multi-touch as you would a mouse – to interact directly with a 2D environment – and some multi-touch applications do seem trivial. For example, the iBar is a very hardwearing multi-touch display built around a projection system. It’s almost a pity the iBar looks like such a gimmick.

Multi-touch is anything but a gimmick, though. To manipulate 3D space with a mouse we need to add extra inputs; if using a normal touchscreen is like using a single mouse, then multi-touch is like using ten - or more! In fact, you’ve probably already seen a multi-touch display in action, or at least a representation of one: in Minority Report, the diminutive Mr Cruise and his cronies use one to edit video.

Cruise gets on with a multi-touch interface.

The scenes where he’s flapping his arms around aren’t as far from reality as you might think. Those are the kind of intuitive gestures, and the kind of feedback, that you can expect from a multi-touch device when it’s paired with an appropriate user interface.

Appropriate interfaces are needed, and fortunately they’re almost ready to hit the streets. Jeff Han’s work on multi-touch interfaces uses simple gestures to manipulate objects on the screen. When you watch him use the interface, all the motions he makes to control the world seem intuitive, easy to learn and easy to experiment with.

While Jeff’s work may be confined to the world of research, both Microsoft and Apple are working on their own versions behind the scenes. Microsoft’s Photosynth uses an interface that seems designed to be used with a multi-touch display. While the application itself is incredibly cool, it’s interesting to see how the interface works: it seems very similar to the photo browser Jeff uses in his multi-touch demonstration. In the Photosynth demonstration you can hear the operator’s mouse wheel whizzing away as he jerkily zooms into the images; in Jeff’s demonstration the same actions are performed effortlessly by placing two fingertips on the screen and moving them away from one another.
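To give a rough idea of why the two-finger zoom feels so natural, here is a minimal sketch of how such a gesture could be turned into a zoom factor. This is not taken from Jeff’s software or from Photosynth; the function name `pinch_scale` and the pixel coordinates are made up for illustration. It simply assumes the zoom is the ratio of the distance between the fingertips at the end of the gesture to the distance at the start.

```python
import math


def pinch_scale(start_a, start_b, end_a, end_b):
    """Return the zoom factor implied by two fingertips moving apart or together.

    Each argument is an (x, y) position in screen pixels (hypothetical input;
    a real panel driver would supply these).
    """
    start_distance = math.dist(start_a, start_b)
    end_distance = math.dist(end_a, end_b)
    if start_distance == 0:
        raise ValueError("fingertips must start at two distinct points")
    # A value above 1 means the fingers moved apart (zoom in),
    # a value below 1 means they moved together (zoom out).
    return end_distance / start_distance


# Two fingers start 100 pixels apart and finish 200 pixels apart.
scale = pinch_scale((100, 100), (200, 100), (50, 100), (250, 100))
print(scale)  # 2.0
```

The zoom tracks the fingers continuously, which is why it looks so much smoother than stepping through mouse-wheel clicks.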

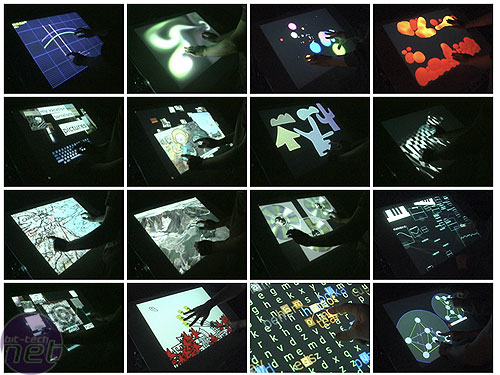

Jeff's multi-touch work has a variety of applications.

Multi-touch even looks good for gaming, as this WoW demonstration shows. You’ll notice that the player uses many of the same gestures to select and zoom as Jeff uses in his demonstration. To make it more sci-fi they’ve added voice recognition to the interface. Unlike the multi-touch interface, voice recognition is still in its infancy (it’s getting better, but still needs plenty of improvement). Voice control is possible if you need it, but you need a quiet environment and clear pronunciation for the computer to correctly recognise each word. In this demo the chap is just using a limited number of phrases, and he’s being careful to separate them with a pause. So he’ll say “unit one”, pause long enough for the computer to recognise that the phrase is complete, and then say his next phrase, “attack”. With sufficient training of the recognition software, accuracy of 90% or above is possible – but for the able-bodied it’s easier to use the mouse and keyboard than mess about.
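The pause-between-phrases trick can be sketched in a few lines. This is only an illustration and not how the demo’s software works: it assumes a recogniser that hands back each word with start and end times in seconds, and the names `split_phrases` and `KNOWN_PHRASES`, the 0.6 second pause threshold and the sample timings are all invented for the example.

```python
def split_phrases(words, pause=0.6):
    """Group (word, start, end) tuples into phrases, breaking at long pauses."""
    phrases = []
    current = []
    last_end = None
    for word, start, end in words:
        # A silence of at least `pause` seconds closes the current phrase.
        if last_end is not None and start - last_end >= pause:
            phrases.append(" ".join(current))
            current = []
        current.append(word)
        last_end = end
    if current:
        phrases.append(" ".join(current))
    return phrases


# Hypothetical limited vocabulary, like the handful of phrases used in the demo.
KNOWN_PHRASES = {"unit one", "attack"}

# Made-up timings: a short gap inside "unit one", then a long pause before "attack".
heard = [("unit", 0.0, 0.3), ("one", 0.35, 0.6), ("attack", 1.5, 2.0)]
commands = [p for p in split_phrases(heard) if p in KNOWN_PHRASES]
print(commands)  # ['unit one', 'attack']
```

Keeping the vocabulary small and the pauses long makes the computer’s job far easier, which is exactly why the chap in the demo speaks the way he does.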

Conclusions

While having a philosophical chat with your computer about the price of beans may be a long way off, multi-touch is not. Apple is reported to have filed some interesting multi-touch patents, and you can be sure that if Apple has it, Microsoft will feel the need to have its own version. No doubt our interfaces will be based on the desktop metaphor for a long time yet - after all, it took 110 years and a new Millennium to remove punch cards from our computer data - but let’s hope it doesn’t take another century before the same can be said of the physical interface.
